Feature importance tells you how each data field affected the model's predictions. For example, to predict credit risk, data fields for age, account size, and account age might be used. In this case, age, account size, and account age are features.
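
As a rough illustration of what a feature importance score measures, the sketch below applies permutation importance, one common model-agnostic technique, to the credit risk example. It is not necessarily what the dashboard computes. The `predict_risk` function is a hypothetical hand-written stand-in for a trained model, and the data rows are made up for the example.

```python
import random

FEATURES = ["age", "account_size", "account_age"]

def predict_risk(row):
    """Hypothetical stand-in model: risk rises for small or new accounts; age is ignored."""
    _age, account_size, account_age = row
    score = 0.0
    if account_size < 1000:
        score += 0.6
    if account_age < 2:
        score += 0.4
    return score

def mean_abs_error(rows, targets):
    return sum(abs(predict_risk(r) - t) for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(rows, targets, n_repeats=20, seed=0):
    """Average increase in error when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_abs_error(rows, targets)
    importances = {}
    for j, name in enumerate(FEATURES):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mean_abs_error(shuffled, targets) - baseline)
        importances[name] = sum(increases) / n_repeats
    return importances

# Made-up rows: (age, account_size, account_age in years).
rows = [
    (25, 500, 1), (40, 5000, 10), (33, 800, 5), (58, 12000, 1),
    (47, 300, 8), (29, 2500, 3), (61, 700, 0.5), (36, 9000, 12),
]
# Use the toy model's own outputs as labels so the baseline error is zero.
targets = [predict_risk(r) for r in rows]

importances = permutation_importance(rows, targets)
for name, value in importances.items():
    print(f"{name}: {value:.3f}")
```

Because the stand-in model never reads `age`, shuffling that column cannot change any prediction, so its importance is zero, while the two account fields receive positive scores.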

In machine learning, features are the data fields used to predict a target data point. Use model interpretability when you need to:

- Complete a regulatory audit of an AI system to validate models and monitor the impact of model decisions on humans.
- Build end-user trust in your model’s decisions by generating local explanations to illustrate their outcomes.
- Uncover potential sources of unfairness by understanding whether the model is predicting based on sensitive features or features highly correlated with them.
- Approach the debugging of your model by understanding it first and identifying whether the model is using healthy features or merely spurious correlations.
- Determine how trustworthy your AI system’s predictions are by understanding which features are most important for the predictions.

The capabilities of this component are built on InterpretML’s capabilities for generating model explanations.

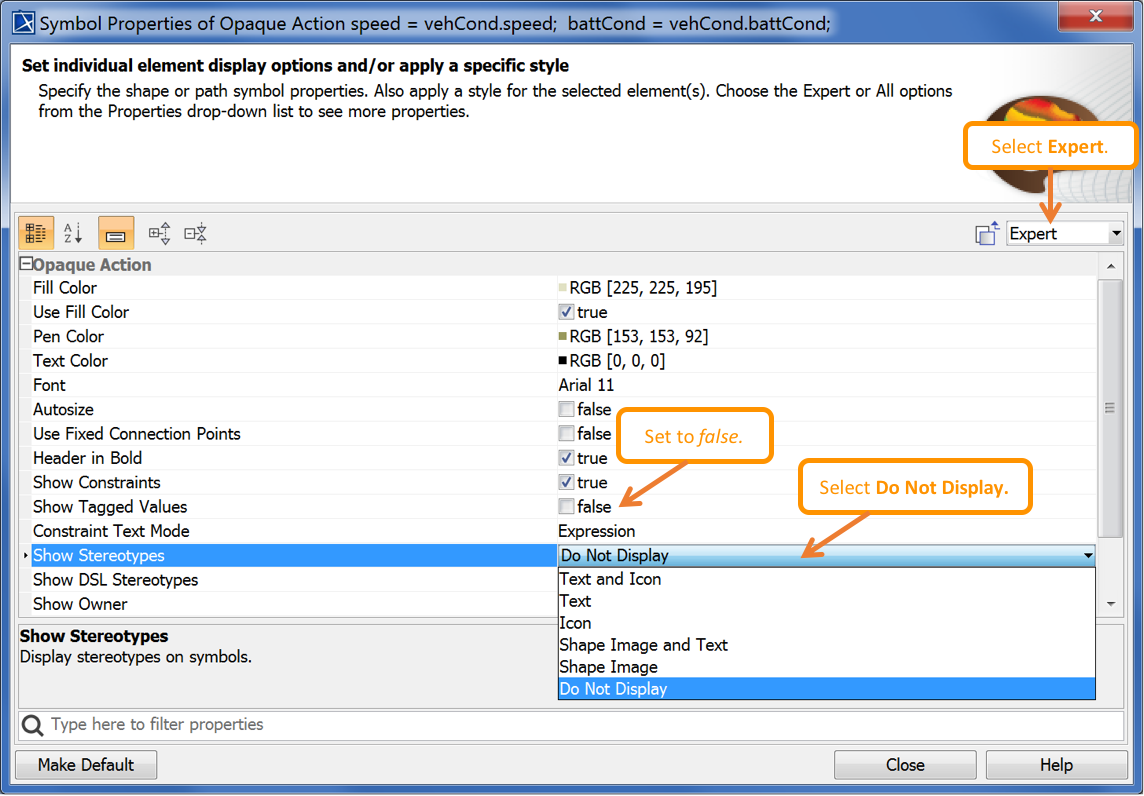


The local explanation tab of this component also provides a full data visualization, which is useful for getting a general look at the data and for comparing correct and incorrect predictions within each cohort. You can also view model explanations for a selected cohort, that is, a subgroup of data points. This is valuable when, for example, assessing fairness in model predictions for individuals in a particular demographic group.
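
The kind of cohort comparison described above can be sketched in a few lines. This is only an illustration with made-up records and hypothetical cohort labels (`under_30`, `30_and_over`); it does not reproduce the dashboard's own visualization.

```python
from collections import defaultdict

# Hypothetical records: (cohort label, true label, predicted label).
records = [
    ("under_30", 1, 1), ("under_30", 0, 1), ("under_30", 1, 0),
    ("30_and_over", 1, 1), ("30_and_over", 0, 0), ("30_and_over", 0, 0),
]

def accuracy_by_cohort(records):
    """Fraction of correct predictions within each cohort."""
    counts = defaultdict(lambda: [0, 0])  # cohort -> [correct, total]
    for cohort, y_true, y_pred in records:
        counts[cohort][0] += int(y_true == y_pred)
        counts[cohort][1] += 1
    return {cohort: correct / total for cohort, (correct, total) in counts.items()}

cohort_accuracy = accuracy_by_cohort(records)
for cohort, accuracy in cohort_accuracy.items():
    print(f"{cohort}: {accuracy:.2f}")
```

A large gap in accuracy between cohorts is a signal to inspect the explanations for the weaker cohort more closely.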

The interpretability component of the Responsible AI dashboard contributes to the “diagnose” stage of the model lifecycle workflow by generating human-understandable descriptions of the predictions of a machine learning model. It provides multiple views into a model’s behavior: global explanations (for example, which features affect the overall behavior of a loan allocation model) and local explanations (for example, why a customer’s loan application was approved or rejected).
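
To make the difference between global and local explanations concrete, here is a minimal sketch for a linear loan-scoring model. Everything in it is an assumption made for illustration: the feature names, weights, and applicant rows are invented, and the attribution rule (weight times the feature's deviation from its mean) is a common additive scheme for linear models, not a description of how the dashboard computes explanations.

```python
FEATURES = ["income", "debt_ratio", "years_employed"]
WEIGHTS = [0.5, -0.8, 0.3]  # hypothetical coefficients of a linear model
BIAS = 0.1

# Made-up applicants with features already scaled to roughly [0, 1].
applicants = [
    (1.0, 0.2, 0.5),
    (0.3, 0.9, 0.1),
    (0.8, 0.4, 0.9),
    (0.2, 0.1, 0.3),
]

def score(x):
    """Linear approval score for one applicant."""
    return BIAS + sum(w * v for w, v in zip(WEIGHTS, x))

# Per-feature means over the dataset, used as the reference point.
MEANS = [sum(col) / len(applicants) for col in zip(*applicants)]

def local_explanation(x):
    """Per-feature contribution to this applicant's score relative to the average."""
    return {name: w * (v - m) for name, w, v, m in zip(FEATURES, WEIGHTS, x, MEANS)}

def global_explanation(rows):
    """Mean absolute local contribution of each feature across all rows."""
    explanations = [local_explanation(x) for x in rows]
    return {
        name: sum(abs(e[name]) for e in explanations) / len(rows)
        for name in FEATURES
    }

mean_score = sum(score(x) for x in applicants) / len(applicants)
local = local_explanation(applicants[1])
global_importance = global_explanation(applicants)

print("local:", local)
print("global:", global_importance)
```

The local explanation answers why one applicant scored above or below average, and its contributions sum to that applicant's score minus the mean score. The global explanation aggregates those contributions to show which features drive the model overall.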

Why is model interpretability important to model debugging? When machine learning models are used in ways that impact people’s lives, it is critically important to understand what influences the behavior of models. Interpretability helps answer questions in scenarios such as model debugging (Why did my model make this mistake? How can I improve my model?), human-AI collaboration (How can I understand and trust the model’s decisions?), and regulatory compliance (Does my model satisfy legal requirements?).

With the release of the Responsible AI dashboard, which includes model interpretability, we recommend that users migrate to the new experience, because the older SDKv1 preview model interpretability dashboard will no longer be actively maintained.
